New York’s Flawed AI Chatbot Initiative

The TDR Three Takeaways on New York’s AI Attempts:

- Mayor Eric Adams supports New York’s AI chatbot despite its advice potentially breaking laws.

- New York’s AI chatbot, part of a pilot program, frequently misinforms users on legal matters.

- Efforts are underway in New York to correct the AI chatbot’s misleading legal advice.

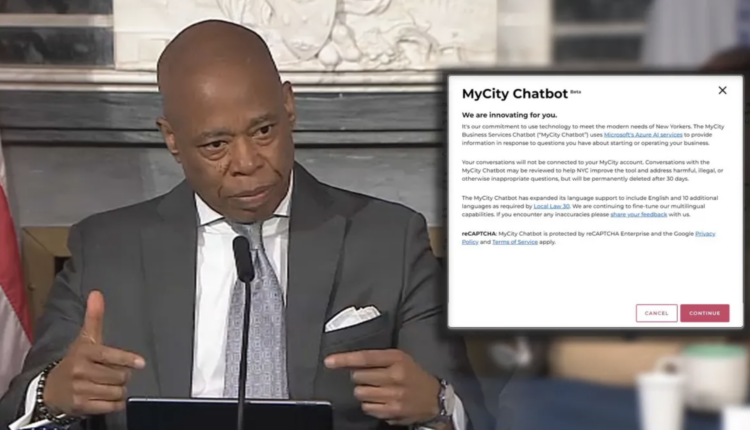

New York City’s venture into artificial intelligence with the MyCity chatbot, aimed at providing entrepreneurs with reliable legal guidance, has hit a snag. Under Mayor Eric Adams’ administration, this pilot program, which uses Microsoft’s Azure AI service, was expected to be a helpful application of AI for municipal support. Instead, it has been marred by inaccuracies that could lead businesses astray by advising actions that violate existing laws.

Launched in October as a pioneering city-wide AI initiative, the chatbot was intended to deliver actionable, trustworthy information to business owners. The reality has diverged significantly from these aspirations. Investigations by The Markup found that the chatbot gave employers incorrect information about workers’ tips and schedule change notifications, among other issues. Such misguidance not only confuses users but also exposes them to serious legal risks, highlighting the challenges of implementing generative AI technologies in critical, real-world applications.

Mayor Adams, a staunch advocate for embracing untested technologies, acknowledges these shortcomings and views them as growing pains inherent to any pilot. The commitment to refining this tool underscores a broader optimism about integrating innovative solutions into the city’s fabric. Yet this stance is not without its detractors, and it has already seen setbacks: a 400-pound AI robot deployed in Times Square for crime deterrence was retired prematurely after failing to meet expectations.

Despite ongoing efforts to correct the chatbot’s misinformation, its advice remains dubious at times. Recent errors include misrepresentations of the city’s stance on cashless businesses and the current minimum wage, reflecting a broader issue with AI’s propensity to fabricate or misinterpret facts. Microsoft, the technology provider, has pledged to work alongside the city to rectify these issues, yet specifics regarding the cause and resolution remain elusive.

The city has taken interim steps to mitigate reliance on the chatbot’s flawed advice by updating disclaimers on its website. These caution against taking the chatbot’s responses as legal or professional advice, a measure that, while prudent, calls into question the utility of such a tool for its intended audience.

Stakeholders, including Andrew Rigie of the NYC Hospitality Alliance, commend the city’s initiative but emphasize the paramount importance of accuracy and reliability. Having to verify the chatbot’s advice with legal counsel defeats its purpose and illustrates the precarious balance between innovation and practical utility in deploying AI at the municipal level.

As New York City aims to solve these challenges, the episode serves as a cautionary tale for other municipalities eyeing AI solutions for public service enhancements. The balance between innovation and accuracy remains delicate, necessitating a thorough vetting process and ongoing oversight to ensure that such technologies serve their intended purpose without leading users astray.